DATA-DRIVEN INSIGHTS AND NEWS

ON HOW BANKS ARE ADOPTING AI

What we learned at the Evident AI Symposium

26 November 2024

TODAY’S BRIEF

We’re back in London after a whistle-stop tour through Paris, Toronto, and New York. Capping it off, more than 300 senior leaders from banking and AI joined us in Midtown Manhattan last Thursday for the third Evident AI Symposium.

For those of you who couldn’t attend the Symposium, we’ve archived all 380 minutes of the event here. For those pressed for time, here are 20 minutes of curated highlights.

Today in the Brief, we’ll draw on the larger themes and big questions from the day to give our best sense of where we are now.

The Brief is 1,969 words, an 8-minute read. If it was forwarded to you, please subscribe. Find out more about our membership offers here. We want to hear from you: [email protected].

– Alexandra Mousavizadeh & Annabel Ayles


TALK OF THE TOWN

JPMC’S RETURN ON ‘SUPER USERS’

The hot topic at last year’s Evident Symposium was talent and testing. Six months ago, it was use cases moving into production. Today, the focus is clearly on measuring ROI – where banks are seeing it, how banks capture it, and what (if anything) they can share.

Even before the program started, we asked the audience when AI will start delivering “meaningful” value for banks. The room was bullish: half said we’re already there, and another 47% expect it to happen in the next one to two years.

Chart: ROI Bulls. Audience poll question: “When do you think AI will start delivering meaningful value for the banking sector?”

Our opening keynoter, JPMC’s Chief Data & Analytics Officer Teresa Heitsenrether, said that while this is a “multi-year journey,” the bank is seeing steady value creation. How steep that trajectory is depends on how quickly the bank demystifies the technology across the wider organization and shows people the most effective ways to work with it. The key, she said, is to identify the 10-20% of “super users” who can connect the dots between the tech and ops. “The AI experts we have in-house are eager to understand the business,” she added. “They’re eager to create value for the firm. That combination is what actually creates the real leverage.”

Take the bank’s LLM Suite tool, which is designed to give 200,000 employees a digital assistant. The legal department uses it to produce quick synopses of dense contracts or regulatory guidance, while Heitsenrether uses it to “red team” business reviews before briefing her boss, Jamie Dimon.

LATEST FROM THE EVIDENT AI INDEX

MORE ROI SKIN, PLEASE

But there’s still a disconnect between what banks see internally and what they share publicly. While all 50 Index banks now document AI use cases in some way, shape, or form, barely half (26, to be exact) describe associated outcomes (e.g., productivity gains, equivalent labor saved, customer satisfaction). Of these, only six disclose outcomes in dollar terms, whether as cost savings or revenue lift.
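A quick check on the disclosure funnel: the counts below (50, 26, 6) are the Index figures from this section, and the snippet simply turns them into shares. Dollar-denominated outcomes come from 12% of all Index banks, or roughly 23% of the banks that report any outcome at all.

```python
# Disclosure funnel, using the Evident AI Index counts cited in this section
TOTAL_BANKS = 50           # banks documenting AI use cases
WITH_OUTCOMES = 26         # banks describing associated outcomes
WITH_DOLLAR_OUTCOMES = 6   # banks disclosing outcomes in dollar terms


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


print(share(WITH_OUTCOMES, TOTAL_BANKS))           # share of all banks reporting outcomes
print(share(WITH_DOLLAR_OUTCOMES, TOTAL_BANKS))    # share of all banks reporting dollars
print(share(WITH_DOLLAR_OUTCOMES, WITH_OUTCOMES))  # dollar reporters among outcome reporters
```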

Chart: Not That Usefully Open. While all 50 banks now report on use cases, only 26 include associated outcomes...

Looking at this chart, Bloomberg’s Erik Schatzker asked what everyone was thinking: “Should the cynic inside me be asking – well, is the reason we’re not seeing a lot of specificity on business cases because there isn’t very much of it?”

In response, Evident Co-founder Annabel Ayles pointed out that while that might well be the case – after all, we’re only in the foothills of AI adoption – reporting on ROI requires significant internal investment: deciding what the ROI metrics should be, how to calculate them, and how to report them before analysts and regulators pick them apart.

Wells Fargo analyst Mike Mayo pressed the panelists on what kind of productivity increase the average bank could expect over the next five years: 1-5%? Rogo’s Gabriel Stengel suggested entry-level analysts would be twice as productive five years from now. Sid Khosla of EY wagered that custodian banks could see large efficiency gains of 30-40%, whereas more relationship-driven investment banks would see more incremental change.

In the final session of the day, Patrick Stokes from Salesforce challenged the audience to think differently. He compared the moment to the early days of cloud computing, when enterprises debated which use cases suited on-premises versus off-premises infrastructure and missed the transformative power of the platform itself. “I think the use case is literally every part of your business – every function in your business.”

NOTABLY QUOTABLE

WHAT TRUMP MEANS

The biggest elephant in the room (no party pun intended) was Donald Trump. In separate sessions, PIMCO’s Manny Roman; author and Eurasia Group founder Ian Bremmer; and Anthony Scaramucci, of SkyBridge and a politics podcast near you, addressed where the new administration might have the biggest impact…

“There needs to be an industrial policy. Building chips in America will take 10 years. It’s a great idea, but the reality is – to replace Taiwan is incredibly complicated and you’ll never get there in the short run.” – Roman

“I think overall, I would be surprised to see Google Chrome-type actions originate under a Trump administration. I mean, their initial orientation is less regulation. It’s ripping up the Executive Order on AI.” – Bremmer

“You’ve got to know what [Elon Musk] is doing… you’ve got to know what he’s thinking. Because he’s going to influence Donald Trump, and he’s going to influence policy and decision-making related to AI. That’s #1.” – Mooch

TOP OF THE NEWS

PEAK AI – ALREADY?

For the last 15 years, the story of AI has been one of combining ever more data with ever more compute to make steady gains in performance. According to media reports, AI labs are now seeing diminishing returns. Rumors of a “scaling wall” have raised concerns about the path to advanced AI. Salesforce CEO Marc Benioff piled on last week, suggesting we’re nearing the “upper limits” of how LLMs can be used.

He may well be right. While AI developers have doubled the “scale” of their models every half year – as measured by the number of calculations, or floating-point operations (FLOPs), performed to train a model, shown in the chart below – this trend line may indeed be flattening. “It cannot be an infinite scale,” Groq Field CTO Chris Stephens said at the Symposium. “I’m sure there’s a limit.”

Chart: Scaling Explosion. The training compute of AI models has doubled every 6 months for over a decade.
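A doubling every six months compounds quickly: it means a fourfold increase each year and, over a decade, a factor of 2^20, roughly a million-fold. A minimal sketch of that arithmetic, taking the chart’s six-month doubling time as a given rather than something the code measures:

```python
def growth_factor(years: float, doubling_time_years: float = 0.5) -> float:
    """Total multiple reached after `years` if a quantity doubles every
    `doubling_time_years` (0.5 = every six months, per the chart)."""
    return 2 ** (years / doubling_time_years)


# One year, a decade, and the 15-year span mentioned in the text
for years in (1, 10, 15):
    print(f"{years:>2} years -> {growth_factor(years):,.0f}x")
```

At this rate one year gives 4x and ten years gives 1,048,576x, which is why even a modest slowdown in the doubling time shows up as a visible bend in the trend line.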

So what does that mean for banks? Stephens and other speakers said the challenge is overstated: even if returns from scaling diminish, there’s plenty of work to do with today’s models. JPMorganChase is looking to deliver $2 billion of value from AI this year, but most of that comes from traditional machine learning approaches that have existed for decades and don’t rely on LLM-scale compute, said the bank’s CDAO, Heitsenrether.

David Wu, who heads AI Product & Architecture Strategy at Morgan Stanley, added that the current models are so strong that a slowdown “is maybe not as big of a concern for us, maybe the next three years are just about getting the right context into the model.”

Even if training has truly reached its limits, scaling approaches could still evolve to produce stronger models. OpenAI’s o1, unveiled two months ago, showcases “test-time compute,” a method that gives a model time to pause and “think” while solving a problem. OpenAI found that more time spent thinking led to better answers on logical tasks like maths or coding. The gains from that extra thinking time required neither more data nor more training.
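OpenAI has not published exactly how o1 spends its extra thinking time, so the toy sketch below illustrates a different, publicly described way to trade inference compute for accuracy: sample several answers and take a majority vote (often called self-consistency). The `noisy_solver` is a hypothetical random stand-in for a model, and its 40% hit rate and answer values are assumptions chosen for illustration. Nothing is retrained; only the number of samples per question changes.

```python
import random
from collections import Counter

CORRECT = 42  # the answer our toy question expects (an arbitrary assumption)


def noisy_solver(rng: random.Random, p_correct: float = 0.4) -> int:
    """Hypothetical stand-in for one model attempt: right with probability
    p_correct, otherwise one of many scattered wrong answers."""
    if rng.random() < p_correct:
        return CORRECT
    return CORRECT + rng.randint(1, 1000)  # wrong answers rarely agree with each other


def majority_vote(rng: random.Random, n_samples: int) -> int:
    """Spend more inference compute: sample n answers, return the most common one."""
    answers = [noisy_solver(rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the majority answer is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote(rng, n_samples) == CORRECT for _ in range(trials))
    return hits / trials


# Same "model", same training: accuracy rises as more samples are spent per question
for n in (1, 5, 25):
    print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

The design choice to keep wrong answers scattered matters: voting helps only when errors disagree with each other more often than correct answers do.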

USE CASE CORNER

SUMMARIZE THIS

While Symposium attendees could have made a drinking game out of how many times “meeting summaries” came up as generative AI’s killer app, Dan Jermyn of CommBank came armed with specifics. That’s no surprise: CommBank reports the second-highest number of AI use cases with associated outcomes, behind only DBS.

Vendor: Amazon Web Services (AWS)

Bank: CommBank

Why it’s interesting: CommBank employs nearly 2,400 call center staff, who field 50,000 customer queries every day. In September, the bank started trialing a generative AI-based chatbot, built on data from prior customer interactions, to handle common questions. This lets staff automate answers to common questions with confidence and respond faster to more specialized inquiries.

ROI: Generative AI makes this cumbersome process “100x faster.”

Wait, there’s more: While fine-tuning the underlying model, the team identified where humans in the loop were potentially reducing the readability and effectiveness of AI-generated answers. Safety checks for AI deployments are usually designed for humans to confirm the effectiveness of machine work, not the other way around. This implementation works in both directions, to mutual advantage.
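The Brief doesn’t describe how CommBank’s call-center chatbot decides which questions to answer on its own. As a rough illustration of the routing idea only (answer common questions automatically, hand everything else to a person), here is a toy sketch. It swaps in simple word-overlap (Jaccard) matching against a hypothetical answer library instead of a generative model, and the 0.5 threshold is an assumption.

```python
import re

# Hypothetical answer library distilled from prior customer interactions;
# the answer texts are placeholders, not real bank guidance.
KNOWN_ANSWERS = {
    "how do i reset my online banking password": "<standard password-reset answer>",
    "what is my card's daily withdrawal limit": "<standard withdrawal-limit answer>",
}


def tokens(text: str) -> set[str]:
    """Lower-case word set used for a crude similarity comparison."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words the two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def route(query: str, threshold: float = 0.5) -> tuple[str, str]:
    """Auto-answer close matches to known questions; escalate the rest to staff."""
    q = tokens(query)
    best_question = max(KNOWN_ANSWERS, key=lambda k: jaccard(q, tokens(k)))
    if jaccard(q, tokens(best_question)) >= threshold:
        return "auto", KNOWN_ANSWERS[best_question]
    return "human", query


print(route("How do I reset my online banking password?"))
print(route("Can you help me dispute a foreign transaction fee?"))
```

The first query matches a known question and is answered automatically; the second shares no words with the library and is passed to a staff member.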

Vendor: n/a

Bank: CommBank

Why it’s interesting: Millions of banking customers qualify for various discounts, rebates, and benefits, but the eligibility requirements, especially for government programs, are not always easy to find or decipher. This tool helps customers offset the rising cost of living by automatically matching them with programs they may qualify for, based on their profile.

ROI: An estimated $1.2 billion in value returned to bank customers.

Wait, there’s more: By fine-tuning the underlying model, the team behind this capability drove a 124% uplift in the number of customers engaging with the tool, creating a flywheel: the more customers use it, the more effective it becomes.
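CommBank’s benefits tool is described only at a high level, and it reportedly relies on a fine-tuned model. The core matching step can still be pictured as checking a customer’s profile against each program’s eligibility rules. The rule-based sketch below is a stand-in for that step; the `Customer` fields, program names, and income and age thresholds are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Customer:
    age: int
    annual_income: float
    has_children: bool


@dataclass
class Benefit:
    name: str
    is_eligible: Callable[[Customer], bool]  # eligibility rule for this program


# Hypothetical programs and thresholds, for illustration only
BENEFITS = (
    Benefit("Seniors concession", lambda c: c.age >= 65),
    Benefit("Family rebate", lambda c: c.has_children and c.annual_income < 80_000),
    Benefit("Low-income energy discount", lambda c: c.annual_income < 40_000),
)


def find_matches(customer: Customer, benefits: tuple[Benefit, ...] = BENEFITS) -> list[str]:
    """Return the names of every program whose rule the customer satisfies."""
    return [b.name for b in benefits if b.is_eligible(customer)]


print(find_matches(Customer(age=70, annual_income=35_000, has_children=False)))
```

Keeping each rule as a separate predicate means new programs can be added without touching the matching logic.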

MODEL CORNER

YOUR AI AGENT WILL COOK DINNER – IN A YEAR, MAYBE

“Foundation models” were last year’s obsession. “Agentic AI” is this year’s preoccupation, judging by how often the term came up last Thursday. While Gen AI creates (i.e., generates) new content in response to prompts, agentic AI is supposed to act autonomously, learn from experience, and operate with minimal oversight (i.e., agency). As one participant joked, it might even make Thanksgiving dinner.

But before we get carried away: banks are adopting a crawl, walk, run approach, said JPMC’s Sumitra Ganesh, and for good reason. Most systems are designed to be reactive; humans define the goal and initial inputs. Domain-specific knowledge is integral to current workflows, so humans in the loop still provide feedback and clarifications to improve the output. And perhaps most of all, we haven’t yet built up nearly enough trust in these systems.

So when might we see these AI agents in the wild? Given where pilots are now, the “killer app” for our new AI co-workers is about a year off, both Ganesh and Luke Gee of TD Bank suggested.

CODA

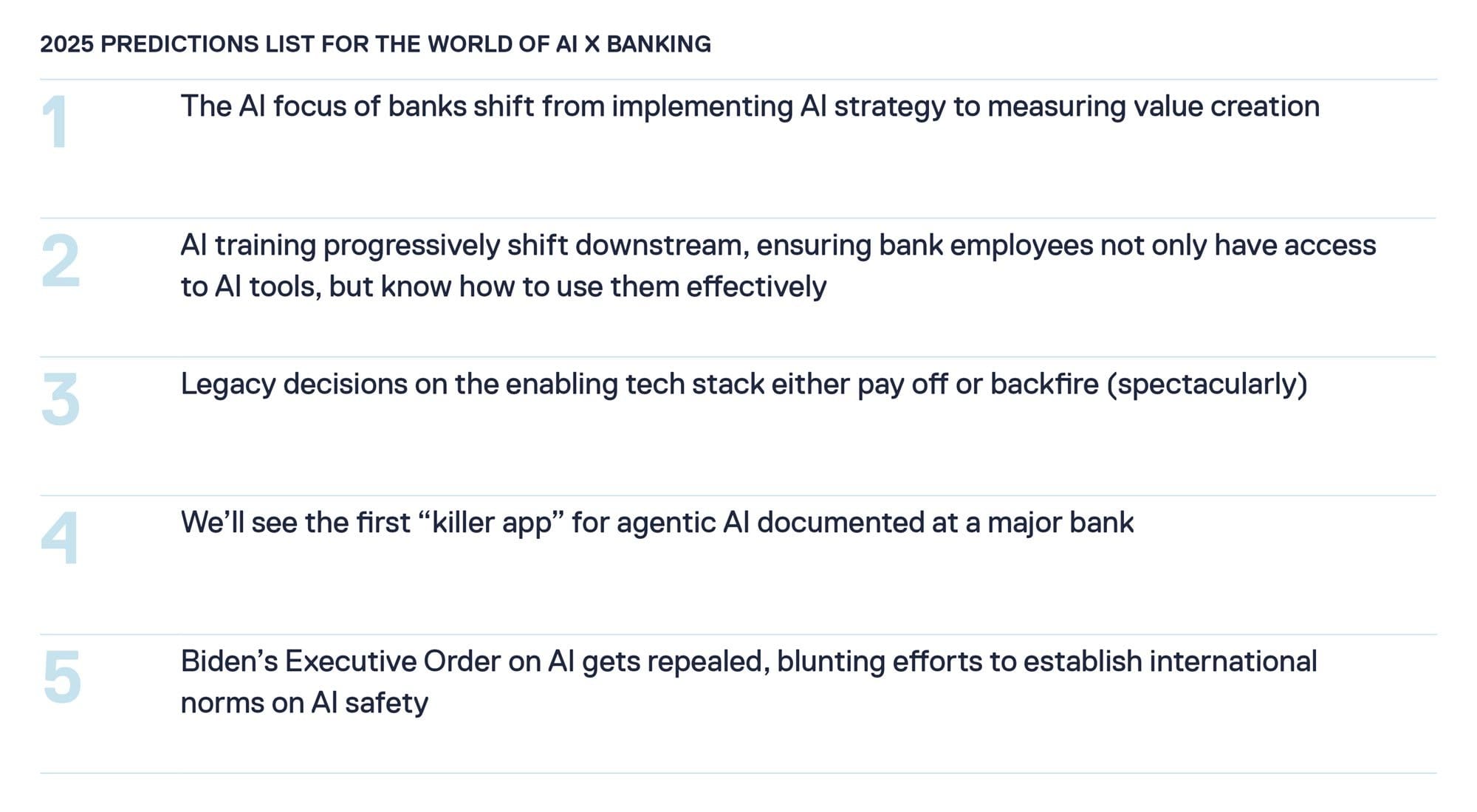

2025 PREDICTIONS

As the curtain came down on Evident’s flagship event, we circled up with our founders to get their hot takes on what’s coming next year. Here’s what’s in their crystal balls.

CORRECTION

The Research Corner section in the 14 November issue of The Brief was updated to remove the attribution of the paper “Exposing Flaws of Generative Model Evaluation” to RBC, and to remove a paper that had been included by mistake due to an error in the underlying data source.

THE BRIEF TEAM

- Alexandra Mousavizadeh | Co-founder & CEO | [email protected]
- Annabel Ayles | Co-founder & co-CEO | [email protected]
- Colin Gilbert | VP, Intelligence | [email protected]
- Andrew Haynes | VP, Innovation | [email protected]
- Alex Inch | Data Scientist | [email protected]
- Matthew Kaminski | Senior Advisor | [email protected]
- Sam Meeson | AI Research Analyst | [email protected]